In an era where digital agility drives competitive advantage, organizations face a pivotal choice in how they deploy and manage their computing resources. The debate centers on three primary models—on‑premises, cloud, and hybrid infrastructure—each offering unique benefits and trade‑offs. Understanding these distinctions is critical for decision‑makers aiming to balance cost, performance, security, and scalability.

1. Defining the Models

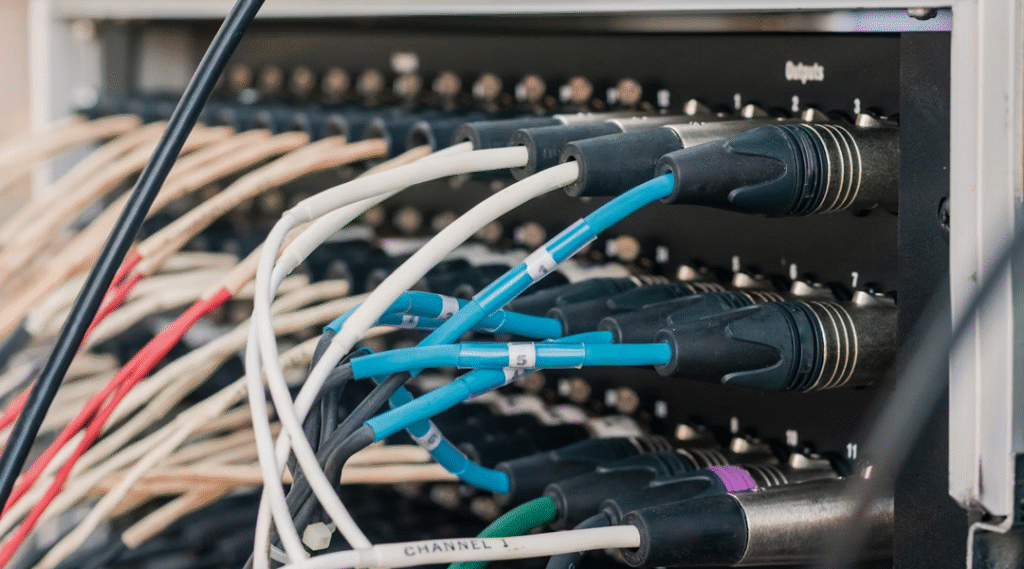

- On‑Premises: This traditional approach involves hosting servers, storage, and networking equipment within an organization’s own data center or server room. The enterprise maintains full control over hardware, software, and security protocols.

- Public Cloud: Computing resources are provided on a pay‑as‑you‑go basis by third‑party vendors (e.g., AWS, Azure, Google Cloud). Infrastructure is abstracted into virtualized services, enabling rapid provisioning without upfront capital expenditure.

- Hybrid Infrastructure: A blend of on‑premises and public cloud environments, interconnected via secure networking. Workloads can be dynamically shifted between environments based on policy, performance requirements, and cost considerations.

2. Cost Considerations

- CapEx vs. OpEx: On‑premises deployments require significant capital expenditure for hardware acquisition and data‑center construction, on top of recurring maintenance costs. In contrast, public cloud turns these costs into operational expenditure, with granular billing (per hour or per second, depending on the service) for compute, storage, and network usage.

- Total Cost of Ownership (TCO): Although the cloud removes hardware refresh cycles from the customer's books, overprovisioning and “cloud sprawl” (forgotten or idle resources) can inflate bills. On‑premises can be more cost‑effective for predictable, high‑utilization workloads but carries hidden costs: power, cooling, real estate, and skilled staff.

- Hybrid Cost Optimization: The hybrid model allows for strategic placement of steady, latency‑sensitive workloads on‑premises, while bursting variable demand into the cloud, achieving both cost control and agility.
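The TCO trade‑off above can be sketched as a simple break‑even model. This is a minimal illustration, not a pricing tool: every figure (capex, yearly opex, hourly rate, instance count, utilization) is a hypothetical assumption, and the model ignores reserved‑instance discounts, egress fees, hardware refresh, depreciation, and the time value of money. It shows why utilization matters: on‑premises costs are largely fixed, while cloud costs scale with the hours actually consumed.

```python
from dataclasses import dataclass
from typing import Optional

HOURS_PER_YEAR = 8760


@dataclass
class OnPremCosts:
    capex: float        # upfront hardware and facility spend (hypothetical figure)
    annual_opex: float  # power, cooling, space, staff, maintenance per year


@dataclass
class CloudCosts:
    hourly_rate: float  # blended price per instance-hour (hypothetical)
    instances: int      # instances needed to cover peak load
    utilization: float  # fraction of the year those instances actually run, in (0, 1]


def on_prem_tco(c: OnPremCosts, years: float) -> float:
    """Capital outlay once, then fixed operating costs every year."""
    return c.capex + c.annual_opex * years


def cloud_annual_cost(c: CloudCosts) -> float:
    """Pay only for the instance-hours actually consumed."""
    return c.hourly_rate * c.instances * HOURS_PER_YEAR * c.utilization


def cloud_tco(c: CloudCosts, years: float) -> float:
    return cloud_annual_cost(c) * years


def break_even_years(op: OnPremCosts, cl: CloudCosts) -> Optional[float]:
    """Years after which cumulative cloud spend exceeds on-prem TCO.

    Returns None when the yearly cloud bill never outgrows on-prem opex,
    i.e. the upfront capex is never recovered under this simple model.
    """
    yearly_gap = cloud_annual_cost(cl) - op.annual_opex
    if yearly_gap <= 0:
        return None
    return op.capex / yearly_gap


if __name__ == "__main__":
    on_prem = OnPremCosts(capex=500_000, annual_opex=120_000)
    steady = CloudCosts(hourly_rate=0.60, instances=50, utilization=1.0)
    spiky = CloudCosts(hourly_rate=0.60, instances=50, utilization=0.4)
    print(f"On-prem 5-year TCO:      {on_prem_tco(on_prem, 5):>12,.0f}")
    print(f"Cloud 5-year TCO (100%): {cloud_tco(steady, 5):>12,.0f}")
    print(f"Cloud 5-year TCO (40%):  {cloud_tco(spiky, 5):>12,.0f}")
    print("Break-even years (100%):", break_even_years(on_prem, steady))
    print("Break-even years (40%): ", break_even_years(on_prem, spiky))
```

With these invented numbers, a steady 24×7 workload costs 1,100,000 on‑premises versus 1,314,000 in the cloud over five years (break‑even after about 3.5 years), while the same fleet running only 40% of the time costs 525,600 in the cloud and never reaches break‑even. That gap is the intuition behind keeping steady load in‑house and bursting variable load to the cloud.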

3. Performance and Scalability

- On‑Premises Performance: Provides direct, low‑latency access to dedicated hardware, with no noisy neighbors from other tenants. Well suited to real‑time analytics, high‑performance computing (HPC), and latency‑sensitive applications.

- Cloud Elasticity: Enables near‑elastic scaling; subject to account quotas and regional capacity, organizations can spin up thousands of virtual machines in minutes. Auto‑scaling features add or remove resources as demand changes, helping maintain a consistent user experience during spikes.

- Hybrid Flexibility: Particularly valuable for businesses with seasonal workloads (e.g., retail peaks during the holidays), where baseline capacity is handled in‑house and excess demand is offloaded to the cloud.
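The hybrid bursting pattern described above can be sketched as a per‑interval capacity split: fill the on‑premises baseline first, then size a cloud fleet for the overflow. This is a simplified stand‑in for real auto‑scaling policies, not any provider's algorithm; the function name, the load units, the per‑instance capacity, and the 70% target utilization (headroom so cloud instances are not run flat out) are all hypothetical assumptions.

```python
import math
from typing import List, Tuple


def split_demand(
    demand: List[float],      # load per interval, e.g. requests/s each hour (hypothetical units)
    on_prem_capacity: float,  # load the in-house baseline can absorb
    per_instance: float,      # load one cloud instance can serve at 100% busy
    target_util: float = 0.7, # plan cloud instances to run at most 70% busy
) -> List[Tuple[float, int]]:
    """Return (load served on-prem, cloud instances to run) for each interval."""
    effective = per_instance * target_util
    if effective <= 0:
        raise ValueError("per_instance and target_util must be positive")
    plan = []
    for load in demand:
        local = min(load, on_prem_capacity)  # baseline absorbs what it can
        overflow = load - local              # the remainder bursts to the cloud
        instances = math.ceil(overflow / effective) if overflow > 0 else 0
        plan.append((local, instances))
    return plan


if __name__ == "__main__":
    # Hypothetical holiday week: demand spikes on days 3 and 4.
    hourly = [800, 1200, 3000, 5000, 1500]
    for local, cloud in split_demand(hourly, on_prem_capacity=2000, per_instance=250):
        print(f"on-prem load={local:>5}  cloud instances={cloud}")
```

In this example the on‑premises baseline covers the quiet intervals alone, while the two spikes need 6 and 18 cloud instances respectively; between spikes the cloud fleet drops back to zero, so the organization pays only for the peak hours.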

4. Security and Compliance

- On‑Premises Control: Full ownership of the security stack—physical access controls, network segmentation, and custom encryption measures—can simplify compliance with strict regulatory regimes (e.g., HIPAA, GDPR).

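- Cloud Shared Responsibility: Cloud vendors secure the underlying infrastructure, while customers are responsible for what they build on top of it. In infrastructure‑as‑a‑service (IaaS) offerings, that includes operating system security, data encryption, identity and access management (IAM), and configuration hygiene; the split shifts toward the provider for platform and software services. Misconfigurations, such as publicly exposed storage, have led to high‑profile breaches.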

- Hybrid Governance: Enables sensitive data to remain on‑premises under tighter controls, while less‑critical or anonymized workloads run in the cloud. Unified policy engines and hybrid identity solutions help maintain consistent security posture.
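The hybrid governance idea of keeping sensitive data on‑premises can be expressed as a placement policy that is checked automatically. This is a plain‑Python sketch under stated assumptions: the data classes, environment names, policy table, and workload names are all hypothetical, and production policy engines typically express such rules declaratively rather than in application code.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Set


class Env(Enum):
    ON_PREM = "on-prem"
    CLOUD = "cloud"


class DataClass(Enum):
    RESTRICTED = "restricted"   # e.g. regulated personal or health data
    INTERNAL = "internal"
    ANONYMIZED = "anonymized"
    PUBLIC = "public"


# Hypothetical policy: the environments each data class is allowed to run in.
POLICY: Dict[DataClass, Set[Env]] = {
    DataClass.RESTRICTED: {Env.ON_PREM},
    DataClass.INTERNAL: {Env.ON_PREM, Env.CLOUD},
    DataClass.ANONYMIZED: {Env.ON_PREM, Env.CLOUD},
    DataClass.PUBLIC: {Env.ON_PREM, Env.CLOUD},
}


@dataclass
class Workload:
    name: str
    data_class: DataClass
    env: Env


def violations(workloads: List[Workload]) -> List[str]:
    """List every workload placed in an environment its data class forbids."""
    return [
        f"{w.name}: {w.data_class.value} data not allowed in {w.env.value}"
        for w in workloads
        if w.env not in POLICY[w.data_class]
    ]


if __name__ == "__main__":
    inventory = [
        Workload("patient-records", DataClass.RESTRICTED, Env.CLOUD),
        Workload("web-analytics", DataClass.ANONYMIZED, Env.CLOUD),
        Workload("claims-db", DataClass.RESTRICTED, Env.ON_PREM),
    ]
    for problem in violations(inventory):
        print(problem)
```

Running the same check against both environments is what gives a "consistent security posture": one rule set, evaluated everywhere, rather than separate conventions per site.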

5. Management and Operational Overhead

- On‑Premises Staffing: Requires specialized personnel for hardware maintenance, network administration, cooling systems, and power management. Downtime risks rise without 24×7 support.

- Cloud Automation: Offers managed services—database as a service (DBaaS), serverless computing, container orchestration—that offload routine tasks, allowing IT teams to focus on innovation rather than infrastructure upkeep.

- Hybrid Orchestration: Tools such as VMware Cloud Foundation, Azure Arc, and AWS Outposts increasingly blur the line between environments, extending a common management plane, centralized dashboards, and policy‑based automation across both.
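Policy‑based automation across environments usually rests on a desired‑state control loop: declare what should run where, observe what actually runs, and compute the actions that close the gap. The sketch below illustrates that general idea only; it does not reflect the API of VMware Cloud Foundation, Azure Arc, AWS Outposts, or any other product, and the environment names, service names, and replica counts are hypothetical.

```python
from typing import Dict, List, Tuple

# Keys are (environment, service); values are replica counts. All names are hypothetical.
State = Dict[Tuple[str, str], int]


def reconcile(desired: State, actual: State) -> List[str]:
    """Return the actions needed to move `actual` toward `desired`.

    Anything observed but not declared is treated as desired = 0,
    so unmanaged leftovers are scaled down.
    """
    actions = []
    for key in sorted(set(desired) | set(actual)):
        env, svc = key
        want = desired.get(key, 0)
        have = actual.get(key, 0)
        if want > have:
            actions.append(f"scale up {svc} in {env}: {have} -> {want}")
        elif want < have:
            actions.append(f"scale down {svc} in {env}: {have} -> {want}")
    return actions


if __name__ == "__main__":
    desired = {("on-prem", "billing-db"): 3, ("cloud", "web"): 6, ("cloud", "batch"): 0}
    actual = {
        ("on-prem", "billing-db"): 2,
        ("cloud", "web"): 6,
        ("cloud", "batch"): 4,
        ("on-prem", "legacy-report"): 1,
    }
    for action in reconcile(desired, actual):
        print(action)
```

A real orchestrator would run this loop continuously and execute the actions through each environment's own interface; the point here is that a single declared state can drive both sites.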

6. Use Cases and Decision Framework

- On‑Premises is best for established enterprises with predictable workloads, stringent latency requirements, or massive data‑gravity concerns (e.g., financial services, on‑site manufacturing control systems).

- Public Cloud shines for startups, SaaS providers, and rapidly scaling applications where time‑to‑market, global reach, and minimal upfront investment are priorities.

- Hybrid Infrastructure suits organizations in transition (e.g., regulated industries adopting cloud for development and testing while keeping production on‑premises) and those needing disaster recovery, backup, and geographic resilience without duplicating entire data centers.
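The use cases above can be condensed into a rough decision rule: traits that favor on‑premises (predictable load, latency sensitivity, data gravity, regulation) pull one way, traits that favor the cloud (rapid scaling, low upfront budget) pull the other, and a workload with both kinds of pull points toward hybrid. This is a deliberately simplified, hypothetical heuristic for illustration; the field names and rules are assumptions, and a real assessment would weigh far more factors.

```python
from dataclasses import dataclass


@dataclass
class WorkloadProfile:
    predictable_load: bool
    latency_sensitive: bool
    large_local_data: bool    # data gravity: large datasets already on-site
    regulated_data: bool
    needs_fast_scaling: bool  # rapid growth or global reach
    low_upfront_budget: bool


def recommend(w: WorkloadProfile) -> str:
    """Hypothetical heuristic mapping workload traits to a deployment model."""
    on_prem_pull = sum([w.predictable_load, w.latency_sensitive,
                        w.large_local_data, w.regulated_data])
    cloud_pull = sum([w.needs_fast_scaling, w.low_upfront_budget])
    if on_prem_pull and cloud_pull:
        return "hybrid"        # constraints on both sides
    if on_prem_pull:
        return "on-premises"   # only on-prem-favoring traits
    return "public cloud"      # nothing anchors the workload on-site


if __name__ == "__main__":
    trading = WorkloadProfile(True, True, True, True, False, False)
    startup = WorkloadProfile(False, False, False, False, True, True)
    bank_dev = WorkloadProfile(False, False, False, True, True, False)
    for name, w in [("trading", trading), ("startup", startup), ("bank dev/test", bank_dev)]:
        print(f"{name}: {recommend(w)}")
```

The three sample profiles mirror the examples in the text: a latency‑bound trading system lands on‑premises, a fast‑growing startup lands in the public cloud, and a regulated firm that also wants elastic dev/test capacity lands on hybrid.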

7. Strategic Recommendations

- Perform a Workload Assessment: Classify applications by performance sensitivity, compliance needs, and growth projections.

- Align Costs with Business Outcomes: Model TCO scenarios over a 3–5 year horizon, factoring in indirect costs (staffing, facilities).

- Invest in Connectivity: Reliable, high‑bandwidth links (MPLS, SD‑WAN, dedicated cloud interconnects) are essential for hybrid success.

- Adopt DevOps and Infrastructure as Code: Standardize deployments, enforce security policies, and streamline updates across environments.

- Plan for Future Evolution: Consider emerging paradigms such as edge computing, micro‑data centers, and AI‑driven optimization to maintain a competitive edge.
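A quick way to size the connectivity recommendation is to estimate how long it takes to move a dataset between environments. The sketch below assumes decimal terabytes (10^12 bytes), a link speed in gigabits per second, and an 80% effective throughput to account for protocol overhead and contention; that efficiency factor and the example sizes are illustrative assumptions, not measured values.

```python
def transfer_hours(data_tb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Hours to move `data_tb` decimal terabytes over a `link_gbps` link.

    `efficiency` is an assumed fraction of nominal bandwidth actually achieved.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be > 0 and efficiency in (0, 1]")
    bits = data_tb * 8e12                               # 1 TB = 10^12 bytes = 8 * 10^12 bits
    seconds = bits / (link_gbps * 1e9 * efficiency)     # effective bits per second
    return seconds / 3600


if __name__ == "__main__":
    for gbps in (1, 10, 100):
        print(f"100 TB over {gbps:>3} Gbps: {transfer_hours(100, gbps):8.1f} hours")
```

Under these assumptions, replicating 100 TB takes about 28 hours over a 10 Gbps interconnect but about 278 hours (roughly 11.6 days) over 1 Gbps, which is why link capacity often decides whether hybrid backup, disaster recovery, or workload migration is practical.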

Final Thoughts

No single model universally triumphs; instead, organizations benefit most from a nuanced approach that matches infrastructure strategy to specific workload characteristics, regulatory constraints, and financial goals. By carefully evaluating the trade‑offs among on‑premises, public cloud, and hybrid solutions—and leveraging orchestration tools and cloud management platforms—enterprises can achieve the optimal balance of cost efficiency, scalability, and control in today’s fast‑moving digital landscape.

All articles in this special edition, DATA CENTER:

(#1) Inside the Digital Backbone: Understanding Modern Data Centers

(#2) From Vacuum Tubes to Cloud Campuses: The Evolution of Data Center Architecture

(#3) From Servers to Coolant: A Deep Dive into Data Center Core Components

(#4) Harnessing Efficiency: Overcoming Energy and Sustainability Hurdles in Data Centers

(#5) Cooling Innovations Powering the Next Generation of Data Centers

(#6) Safeguarding the Core—Data Center Security in the Physical and Cyber Domains

(#7) Decentralizing the Cloud: The Rise of Edge Computing and Micro Data Centers

(#8) Data Center: Cloud, On-Premises, and Hybrid Infrastructure

(#9) Intelligent Data Center Management and Automation

(#10) Market Landscape and Key Players in the Data Center Industry

(#11) Navigating Regulatory, Compliance, and Data Sovereignty in Modern Data Centers
